Hi,

The probes currently used in the Helm chart on “:3000/” return a 200 even if the Rails server isn’t fully started. This leads to traffic being routed to unready services and to 504 errors, which undermines the high-availability benefits of Kubernetes. As far as I can see, there is no alternative endpoint for this use case.

The solution would be to provide a health endpoint which returns 200 only when the rails server is ready to accept connections.

Rails itself already includes this feature, but it seems not to be available through Zammad at the moment: Rails::HealthController
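To illustrate the idea, here is a minimal sketch of what the readiness probe could look like if such an endpoint existed. The `/up` path is an assumption: it is the route that newly generated Rails 7.1+ applications map to Rails::HealthController, but Zammad does not expose it as far as I can tell, so this would not work as-is today. The threshold and timeout values are simply the ones from the current chart.

```yaml
# Sketch only: assumes Zammad exposed Rails::HealthController at /up.
readinessProbe:
  httpGet:
    path: /up        # assumed route, not currently available in Zammad
    port: 3000
  failureThreshold: 5
  timeoutSeconds: 5
```

Unlike the Zammad monitoring endpoint, a probe like this would need no auth token and would only reflect the state of the single container it runs against.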

I think that would be a great improvement for the stability of Zammad.

How this differs from Zammad Monitoring: the monitoring endpoint requires an auth token and returns the health of the complete setup, not of a single container.

@simonhir thanks for the proposal! To clarify this first, I would like to understand better what the issue is. Currently, we have

    readinessProbe:
      httpGet:
        path: /
        port: 3000
      failureThreshold: 5
      timeoutSeconds: 5

This does perform a regular HTTP call to the Rails application, so I would assume that it has to have booted first before answering the request here. Is this not the case? What exactly happens when it goes wrong?

@simonhir I just tried to reproduce this, without success.

To do this, I placed an artificial delay (sleep 10) in one of our initializer files to prolong the startup period. When the Puma web server starts, it only begins listening on the port after initialization has completed. During that period, HTTP calls fail:

    curl localhost:3000
    curl: (7) Failed to connect to localhost port 3000 after 0 ms: Couldn't connect to server

Afterwards, the correct content is immediately served. Therefore I wonder if the described issue actually exists, since the readiness probe does perform HTTP calls.


@mgruner thanks for the detailed answer and sorry for my delayed reply.

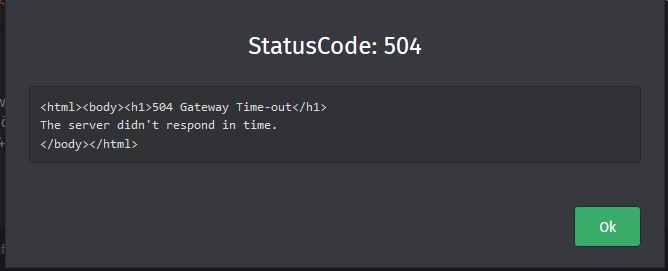

For context: I created this request because we received 50x errors during roll-outs of updates (both as a popup in the UI and as a full page). They disappeared after a few seconds, but that got me thinking that this could only come from an invalid health check (sorry for the probably hasty conclusion). As not every user seems to receive these messages and not every update seems to trigger this behavior, it’s unfortunately very difficult to analyse.

I will get back as soon as I have more information or close this if the problem no longer occurs, if that is okay.