Infos:

- Used Zammad version: 3.1.0

- Used Zammad installation source: packager.io stable branch

- Operating system: debian 9

- Browser + version: current chrome, firefox, edge

Expected behavior:

- Anything I do should happen without lag

Actual behavior:

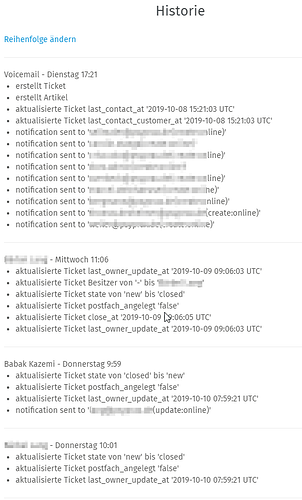

- changing owner, changing state, changing group sometimes very slow.

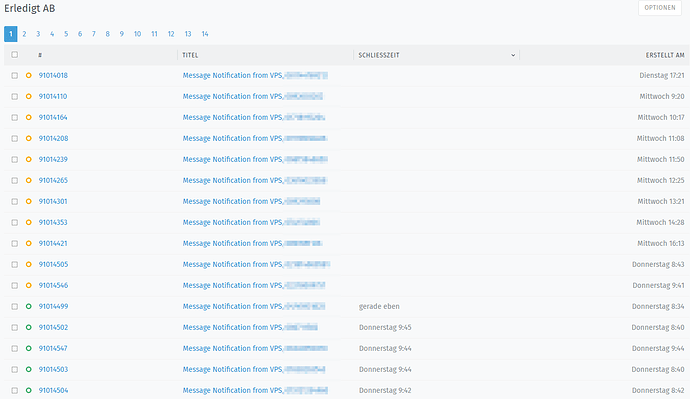

We have ~5-6 concurrent agents

Our system specs: VMware vSphere Host machine with AMD EPYC 7351P, 128GB RAM, iSCSI Target: Synology FS1018 with 6 Samsung SM863a SSDs. Zammad VM has 4 cores, 24GB RAM, 50GB SSD. We are having 4x100% CPU load (each core 100%) 40-50% the time, especially when:

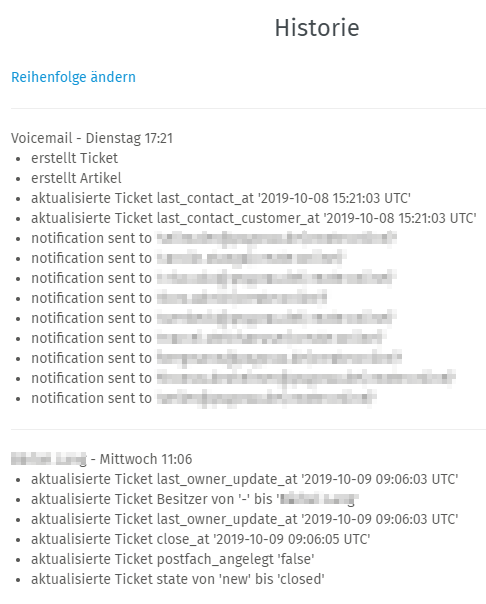

- new ticket is created via mail

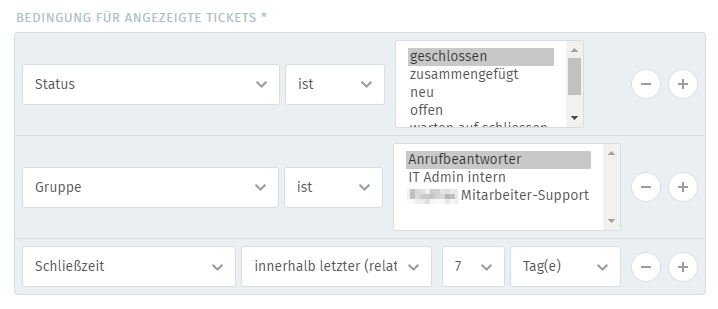

- user is changing overview

- user is changing ticket state

- user is adding an article to a ticket

etc

everytime we have these “spikes” (lasting for 10-20 seconds), we have several ruby processes eating up 100% cpu time each.

We have set WEB_CONCURRENCY=4, that has helped a bit, after this we raised the DB connections because of timeouts. Also we have changed storage from DB to FS, this also boosted performance marginally.

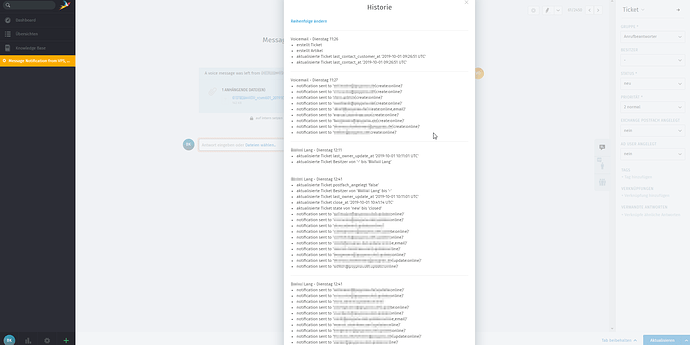

Every time the system becomes sluggish, Delayed::Job.count raises to something like 200-300, jobs are slowly processed and when it is down at 100, system is responsive again.

Steps to reproduce the behavior:

- use zammad