Infos:

- Used Zammad version: 6.5.0-173

- Used Zammad installation type: Docker compose

- Operating system: Ubuntu

- Browser + version:

- Tried rebuilding the Elasticsearch index with rake

- Rebooted

- Updated to the most recent docker compose version

- Checked the first delayed job and the delayed job count (0 and nil)

Expected behavior:

- I would expect the background worker to use more RAM at specific moments, a couple of times a week.

Actual behavior:

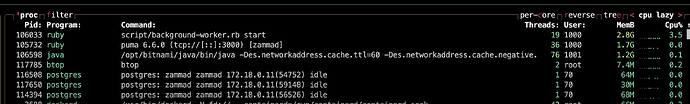

- After an hour or so, the background worker/puma uses all the RAM it can get and never goes back down (high usage 24/7).

Steps to reproduce the behavior:

- Deploy the docker-compose setup

The machine has 8 GB of RAM. I saw other posts where Elasticsearch was eating all the RAM. How can I find out what the background worker is working on that makes it consume so much RAM? Do we just need to upgrade, or is something hanging?
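A first step could be confirming which process actually holds the memory and how its usage develops over time. This does not show which jobs the worker is running. Container processes are visible in `/proc` on the Docker host, so the minimal Python sketch below reads each process's resident set size (`VmRSS`) from there. The match strings `background-worker` and `puma` are assumptions about what the Zammad command lines contain, so compare them against `ps aux` before relying on the output.

```python
import os
import re
from typing import Iterator, Optional, Tuple

# Assumed substrings of the Zammad command lines; verify with `ps aux` first.
PATTERNS = ("background-worker", "puma")

_VMRSS = re.compile(r"^VmRSS:\s+(\d+)\s+kB", re.MULTILINE)


def parse_vmrss_kib(status_text: str) -> Optional[int]:
    """Return the resident set size (KiB) from /proc/<pid>/status, or None."""
    match = _VMRSS.search(status_text)
    return int(match.group(1)) if match else None


def matching_processes(patterns=PATTERNS) -> Iterator[Tuple[int, str, int]]:
    """Yield (pid, command line, RSS in KiB) for processes matching any pattern."""
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue  # skip non-process entries such as /proc/meminfo
        try:
            with open(f"/proc/{entry}/cmdline", "rb") as f:
                # Arguments are NUL-separated; join them with spaces for display.
                cmdline = f.read().replace(b"\0", b" ").decode(errors="replace").strip()
            with open(f"/proc/{entry}/status") as f:
                rss = parse_vmrss_kib(f.read())
        except OSError:
            continue  # process exited meanwhile or is not readable
        # Kernel threads have no VmRSS line, so rss is None for them.
        if rss is not None and any(p in cmdline for p in patterns):
            yield int(entry), cmdline, rss


def print_report(patterns=PATTERNS) -> None:
    """Print matching processes, largest RSS first."""
    rows = sorted(matching_processes(patterns), key=lambda row: row[2], reverse=True)
    for pid, cmdline, rss in rows:
        print(f"{pid:>8} {rss / 1024:>9.1f} MiB  {cmdline[:80]}")
```

Calling `print_report()` every few minutes (for example from cron) and keeping the output would show whether the RSS climbs steadily or jumps at particular times. Those times could then be matched against the scheduler logs.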

I can corroborate this report, though we have a central Postgres and Elasticsearch, i.e. the machine running Zammad only hosts Zammad, memcached and Redis. We are seeing 2.2 GB of RAM usage for the background worker after less than 48 hours on a low-traffic Zammad instance (6 mails and probably fewer than 10 agents in that timeframe).

Responses to some other reports, for example that this is inherent to how Ruby and Rails work, are not something I’m satisfied with. We host a Rails app at my day job, and while I wouldn’t say we don’t leak memory, it takes thousands of jobs before the worker is restarted for hitting a ~500 MB ceiling. I’m also cognisant of the fact that this is not easy to trace. It is indeed a problem across the RoR community (there’s a plethora of gems that restart processes once some condition, for example a memory-usage threshold, is hit).

Now on to solutions: the gems linked above won’t work, as Zammad has hand-rolled background processing. I guess I’ll explore limiting the RAM for the zammad-scheduler container and forcing restarts that way.
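The forced-restart idea can be approached without a hard container limit by applying the same threshold logic as those gems from outside the container. The minimal Python sketch below polls `docker stats` and calls `docker restart` once usage exceeds a threshold. The container name, the 1.5 GiB threshold and the 5-minute interval are hypothetical placeholders, not values from Zammad; check `docker ps` for the real container name.

```python
import re
import subprocess
import time

# Hypothetical container name; check `docker ps` for the real one.
CONTAINER = "zammad-docker-compose-zammad-scheduler-1"
# Assumed threshold: restart once usage exceeds 1.5 GiB.
LIMIT_BYTES = 1.5 * 1024**3
CHECK_INTERVAL_S = 300  # assumed polling interval (5 minutes)

_UNITS = {"B": 1, "kB": 1000, "KiB": 1024, "MB": 1000**2, "MiB": 1024**2,
          "GB": 1000**3, "GiB": 1024**3, "TB": 1000**4, "TiB": 1024**4}
_SIZE = re.compile(r"^\s*([\d.]+)\s*([A-Za-z]+)")


def parse_mem_usage(mem_usage: str) -> float:
    """Convert the used part of a MemUsage string ("2.2GiB / 7.76GiB") to bytes."""
    used = mem_usage.split("/")[0]
    match = _SIZE.match(used)
    if not match or match.group(2) not in _UNITS:
        raise ValueError(f"unrecognised memory value: {mem_usage!r}")
    return float(match.group(1)) * _UNITS[match.group(2)]


def current_usage(container: str) -> float:
    """Take one `docker stats` sample and return the container's memory use in bytes."""
    out = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", "{{.MemUsage}}", container],
        check=True, capture_output=True, text=True,
    ).stdout
    return parse_mem_usage(out)


def watch(container: str = CONTAINER, limit: float = LIMIT_BYTES) -> None:
    """Loop forever, restarting the container whenever it exceeds the limit."""
    while True:
        usage = current_usage(container)
        if usage > limit:
            print(f"{container}: {usage / 1024**3:.2f} GiB over limit, restarting")
            subprocess.run(["docker", "restart", container], check=True)
        time.sleep(CHECK_INTERVAL_S)
```

Calling `watch()` on the Docker host (e.g. as a systemd service) would keep the worker below the threshold. A restart may interrupt whatever the worker is processing at that moment, so the threshold should stay well above normal peak usage.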

EDIT: clarified that the background-worker has a very high memory usage.