Info:

- Used Zammad version: 3.6.0

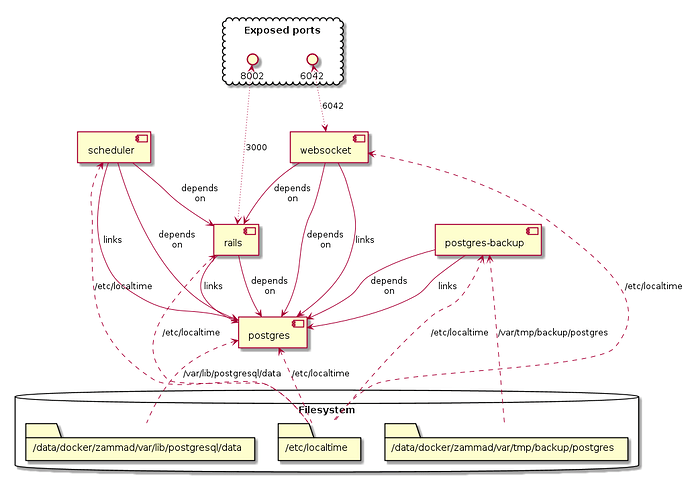

- Used Zammad installation source: source within custom Docker containers

- Operating system: Host: Ubuntu 20.04, Docker containers based on ubuntu:20.04

- Browser + version: Firefox 84.0.2 (on Ubuntu 20.04)

Expected behavior:

Scheduled tasks are executed and the web interface is updated to reflect the task state.

Actual behavior:

Scheduled tasks are run by the scheduler as expected, but the rest of Zammad doesn’t seem to get notified about it.

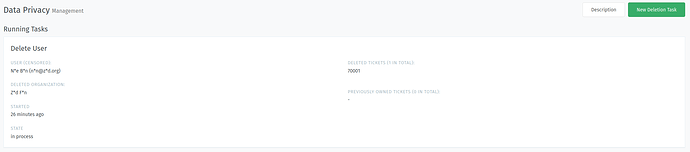

Use case 1:

A user is deleted and the deletion task is run properly by the scheduler, but the state in the web interface doesn’t get updated (it stays at ‘in process’).

Steps to reproduce the behavior:

- Set up a fresh Zammad instance

- Delete example user

- Watch the privacy data task state

Scheduler log

I, [2021-01-21T09:14:10.937793 #1-70327549038780] INFO -- : execute DataPrivacyTaskJob.perform_now (try_count 0)...

I, [2021-01-21T09:14:10.938268 #1-70327549038780] INFO -- : Performing DataPrivacyTaskJob (Job ID: 63bc340a-402a-4320-8aac-271579470f32) from DelayedJob(default)

I, [2021-01-21T09:14:12.557570 #1-70327549038780] INFO -- : Enqueued TicketCreateScreenJob (Job ID: 30abd6f2-a1a8-45c5-9525-bc3bd3908244) to DelayedJob(default) at 2021-01-21 08:14:22 UTC

I, [2021-01-21T09:14:12.593244 #1-70327549038780] INFO -- : Performed DataPrivacyTaskJob (Job ID: 63bc340a-402a-4320-8aac-271579470f32) from DelayedJob(default) in 1654.85ms

I, [2021-01-21T09:14:12.593433 #1-70327549038780] INFO -- : ended DataPrivacyTaskJob.perform_now took: 1.662182316 seconds.

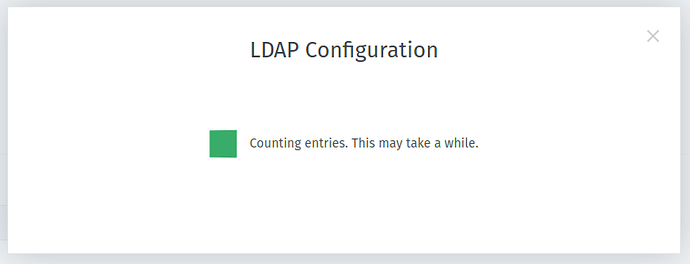

Use case 2:

LDAP integration is configured and users should be imported, but the configuration dialog stays at ‘Counting entries. This may take a while.’ indefinitely.

Steps to reproduce the behavior:

- Set up a fresh Zammad instance

- Configure LDAP integration for the first time

Scheduler log

I, [2021-01-21T09:28:08.346334 #1-47249299742760] INFO -- : 2021-01-21T09:28:08+0100: [Worker(host:a7129ab8f5ce pid:1)] Job AsyncImportJob [13ab6015-e3c1-49d4-b238-834930b3a1e3] from DelayedJob(default) with arguments: [{"_aj_globalid"=>"gid://zammad/ImportJob/1"}] (id=25) (queue=default) RUNNING

I, [2021-01-21T09:28:08.375590 #1-47249299742760] INFO -- : Performing AsyncImportJob (Job ID: 13ab6015-e3c1-49d4-b238-834930b3a1e3) from DelayedJob(default) with arguments: #<GlobalID:0x00007fecd17385f0 @uri=#<URI::GID gid://zammad/ImportJob/1>>

I, [2021-01-21T09:28:09.192676 #1-47249299742760] INFO -- : Performed AsyncImportJob (Job ID: 13ab6015-e3c1-49d4-b238-834930b3a1e3) from DelayedJob(default) in 816.89ms

I, [2021-01-21T09:28:09.196384 #1-47249299742760] INFO -- : 2021-01-21T09:28:09+0100: [Worker(host:a7129ab8f5ce pid:1)] Job AsyncImportJob [13ab6015-e3c1-49d4-b238-834930b3a1e3] from DelayedJob(default) with arguments: [{"_aj_globalid"=>"gid://zammad/ImportJob/1"}] (id=25) (queue=default) COMPLETED after 0.8499
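To confirm from the scheduler side that every job really finished, the "Performing" and "Performed" lines can be paired by their Job ID. The following is a minimal sketch, assuming only the log line format shown above; the function name `unfinished_jobs` and the regular expression are illustrative and not part of Zammad.

```python
import re

# Matches ActiveJob lines such as
# "Performing DataPrivacyTaskJob (Job ID: 63bc340a-...) from DelayedJob(default)".
# "Enqueued ..." lines are ignored on purpose.
JOB_LINE = re.compile(
    r"(?P<event>Performing|Performed) (?P<job>\w+) \(Job ID: (?P<id>[0-9a-f-]+)\)"
)


def unfinished_jobs(log_text):
    """Return {job_id: job_class} for jobs that started but never logged completion."""
    started = {}
    for line in log_text.splitlines():
        match = JOB_LINE.search(line)
        if match is None:
            continue
        if match["event"] == "Performing":
            started[match["id"]] = match["job"]
        else:
            # A "Performed" line closes the job with the same ID.
            started.pop(match["id"], None)
    return started
```

Calling `unfinished_jobs(log_text)` with the contents of either scheduler log above returns an empty dict, since each "Performing" line has a matching "Performed" line. That is consistent with the jobs completing on the scheduler side while the state shown in the web interface stays stale.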

Additional info:

All other parts of Zammad seem to work properly.

Docker stack:

On top of this stack, there is a central nginx server that handles SSL encryption and forwards the /ws requests to port 6042.
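One way to rule out the proxy hop is to check, from the nginx host, that a plain TCP connection to port 6042 can be opened at all. This is a minimal sketch using only the Python standard library; the helper name `port_reachable` is illustrative, and it only tests TCP reachability, not the WebSocket handshake itself.

```python
import socket


def port_reachable(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

For example, `port_reachable("zammad-websocket", 6042)` could be run on the nginx host, where `zammad-websocket` is a hypothetical hostname standing in for whichever container or host the /ws requests are forwarded to.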

I suspect a communication problem between the scheduler and the Rails server, though I cannot find any error in any log.

How does the acknowledgement of successful scheduler tasks work?

I’d be very thankful for any hint!